
Hello,

I have successfully got the storj exporter running via Docker and now wanted to get Prometheus running as well.

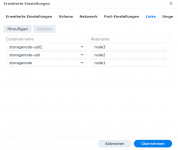

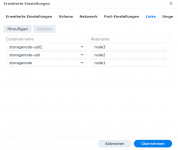

These are the settings I configured.

As soon as I start the container, it keeps restarting.

My prometheus.yml looks like this:

```yaml
# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

  # Attach these labels to any time series or alerts when communicating with
  # external systems (federation, remote storage, Alertmanager).
  external_labels:
    monitor: 'codelab-monitor'

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first.rules"
  # - "second.rules"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'prometheus'

    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.

    static_configs:
      - targets: ['localhost:9090']

  - job_name: 'storjnode1'
    scrape_interval: 30s
    scrape_timeout: 30s
    static_configs:
      - targets: ['node1:9651']

  - job_name: 'storjnode2'
    scrape_interval: 30s
    scrape_timeout: 30s
    static_configs:
      - targets: ['node2:9651']

  - job_name: 'storjnode3'
    scrape_interval: 30s
    scrape_timeout: 30s
    static_configs:
      - targets: ['node3:9651']
```
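The three storj jobs set `scrape_timeout` equal to `scrape_interval` (30s). As far as I know, Prometheus refuses to load a config in which a job's timeout is *greater* than its interval, while equal values are accepted, so this setting on its own should not stop the container from starting. The Python sketch below checks just that one rule. It is a minimal illustration under stated assumptions, not Prometheus's own validation: the job values are copied by hand from the config (parsing the file itself would need a YAML library), the duration parser handles a simplified subset of Prometheus duration syntax, and the default for jobs without an explicit timeout (the global timeout capped at the job's interval) reflects my understanding of the config loader. The helpers `parse_duration_ms` and `check_jobs` are hypothetical names.

```python
import re

# Milliseconds per unit for the units Prometheus durations use.
# Simplification: this sketch does not enforce Prometheus's rule that units
# appear in descending order and at most once each.
UNITS_MS = {
    "ms": 1,
    "s": 1_000,
    "m": 60_000,
    "h": 3_600_000,
    "d": 86_400_000,
    "w": 604_800_000,
    "y": 31_536_000_000,
}
# "ms" is listed before "m" so that findall reads "500ms" as milliseconds
# instead of stopping after "500m".
PART = r"(\d+)(ms|s|m|h|d|w|y)"


def parse_duration_ms(text: str) -> int:
    """Convert a duration such as '30s' or '1m30s' to milliseconds."""
    if not re.fullmatch(f"(?:{PART})+", text):
        raise ValueError(f"not a valid duration: {text!r}")
    return sum(int(n) * UNITS_MS[unit] for n, unit in re.findall(PART, text))


def check_jobs(global_interval: str, global_timeout: str, jobs: list) -> list:
    """Return one message per job whose scrape_timeout exceeds its scrape_interval."""
    problems = []
    for job in jobs:
        interval = job.get("scrape_interval", global_interval)
        if "scrape_timeout" in job:
            timeout = job["scrape_timeout"]
        else:
            # Assumed default: the global timeout, capped at the job's interval.
            timeout = min(global_timeout, interval, key=parse_duration_ms)
        if parse_duration_ms(timeout) > parse_duration_ms(interval):
            problems.append(
                f"{job['job_name']}: scrape_timeout {timeout} > scrape_interval {interval}"
            )
    return problems


# Values copied by hand from the prometheus.yml above
# (global interval 15s, global timeout at its 10s default).
JOBS = [
    {"job_name": "prometheus"},
    {"job_name": "storjnode1", "scrape_interval": "30s", "scrape_timeout": "30s"},
    {"job_name": "storjnode2", "scrape_interval": "30s", "scrape_timeout": "30s"},
    {"job_name": "storjnode3", "scrape_interval": "30s", "scrape_timeout": "30s"},
]

print(check_jobs("15s", "10s", JOBS))  # → []
```

With the values from this config the check comes back empty, so the timeouts alone should not explain the restart loop; the container's own output (for example via `docker logs <container>`) is usually where the actual startup error shows up.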

I'm really at a loss at this point. Does anyone have an idea?
